While I was researching a topic recently, a GenAI tool presented several relevant statistics. I asked it to fact-check the numbers and cite sources. With a confidence that might inspire envy, it assured me they were all correct, even providing citations with a precision rivaling that of scientific journals.

Except it was confidently wrong.

When I dug deeper, a few of the referenced reports turned out not to exist. And where the reports could be traced, the numbers it cited were nowhere to be found. The AI wasn’t just mistaken; it was misleading.

We now live in an age where producing a 500-page report takes mere minutes, thanks to AI. Yet, reviewing such a document for veracity and substance still demands days, even weeks, of human effort. This stark contrast highlights a crucial point: while the future may bring us self-correcting AI, for now, every AI-generated output requires human oversight. Humans must remain the first and last line of defense. Organizations that overlook this safeguard often find themselves humbled, and sooner rather than later.

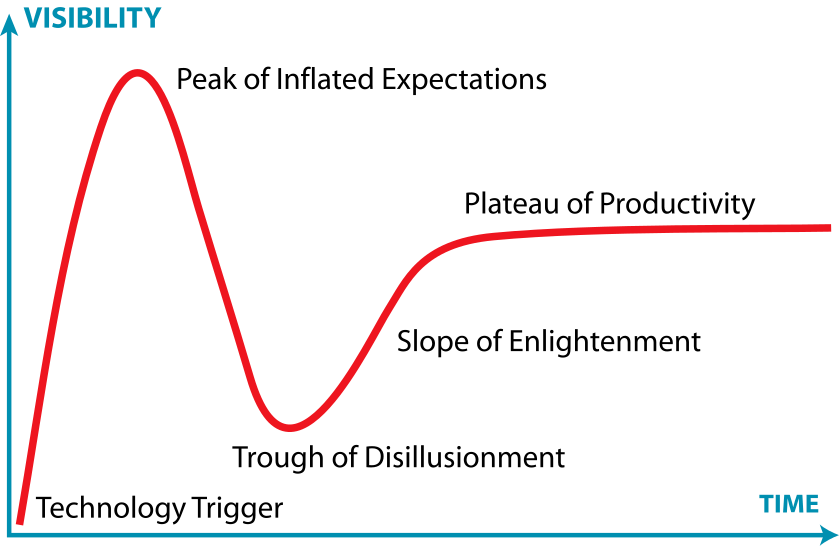

This situation places us squarely at what Gartner calls the “Peak of Inflated Expectations” in its Hype Cycle. While I’m convinced that AI meaningfully boosts productivity, its quality and reliability remain open questions. The machine can generate volume, but it’s human scrutiny that ensures truth and trust.

AI as Assistant, Not Oracle: The Courts Draw a Line

The need for human discernment isn’t limited to business or casual inquiry. Even the hallowed halls of justice are recognizing AI’s limitations. In a recent and notable move, the Kerala High Court in India issued a pioneering policy explicitly prohibiting the use of AI in judicial decision-making.

Why such a definitive stance?

As the Court’s policy articulates, “indiscriminate use of AI tools might result in negative consequences, including violation of privacy rights, data security risks and erosion of trust in the judicial decision making.” The message is clear: AI may assist, but it cannot replace humans. Legal reasoning demands human supervision, transparency, fairness, confidentiality, and accountability.

This sentiment is echoed globally. The European Union’s comprehensive AI Act classifies applications in sensitive domains like legal systems, hiring, and healthcare as “high risk.” The implication is clear: when a decision carries the weight of altering a human life, it must be rendered by a person, not merely a probabilistic language model.

To be fair, some argue that AI can eliminate certain human biases, offering consistency and efficiency where human judgment may falter. But that holds only if the AI itself is built on unbiased, fully auditable data and processes. Even then, it requires human monitoring to interpret outcomes, correct errors, and preserve ethical integrity. Ultimate responsibility must rest with humans, not lines of code.

The Vanishing Privilege: AI, Therapy, and Personal Data

A surprising trend has emerged among Gen Z: using GenAI as a therapist. Influencers share mega-prompts designed for self-improvement, and the idea of a tireless, judgment-free digital confidant is gaining traction.

But the illusion of safety is just that: an illusion.

Sam Altman, CEO of OpenAI, offered a stark clarification: there is no legal confidentiality or privilege when you pour your heart out to ChatGPT. Conversations with doctors, lawyers, or licensed therapists are legally protected; conversations with ChatGPT are not, and OpenAI could be legally required to hand them over if subpoenaed. So, whether you’re venting frustrations or sharing your most personal thoughts, those digital confessions could, conceivably, resurface in a lawsuit.

The intimacy and trust inherent in human therapeutic relationships, built on confidentiality and compassion, cannot be replicated by an algorithm.

This concern extends far beyond therapy. New York City’s law on AI bias in hiring (Local Law 144) now mandates independent bias audits for automated tools used in employment decisions. Who wants to lose a job opportunity to an opaque algorithm that may carry embedded bias? Our personal data, and the decisions that impact our lives, demand a level of scrutiny that AI, in its current form, cannot yet provide.

The Enduring Imperative of Human Oversight

AI, though powerful, is not infallible. It can certainly aid our endeavors, but our hand must remain on the steering wheel. AI must be guided by our unique human capacities for critical thinking, ethical reasoning, and nuanced understanding.

In fact, human oversight may well be the defining value in a world inundated by artificial output. Consider Gartner’s prediction: by 2028, 70% of marketing budgets may pivot back to offline channels, a direct consequence of a collective digital detox. As AI saturates digital spaces with manufactured content, the premium on authentic, human-crafted interaction will only rise.

Perhaps nowhere is this shift more evident than in functional communication, particularly email. When lengthy prose is no longer a signal of effort, given how effortlessly AI can generate it, the new mark of care is the inverse: respecting the reader’s time through brevity, clarity, and thoughtful intention. In the coming years, it may not be what we write that matters most, but how much of it we choose to edit.

Responsibility Is Not Optional

As AI advances, the temptation to outsource judgment is real. But speed without scrutiny is dangerous. AI may change how we create, communicate, and decide. But it cannot replace human responsibility.

Human oversight is the foundation upon which ethical, accountable, and trustworthy use of AI must rest. And it has to remain non-negotiable. At least as long as we value truth over speed, accountability over automation, and people over programs.
